After months of speculation, OpenAI’s “Strawberry” LLM has finally arrived, and it’s not called GPT-5. In the early hours of September 13th, OpenAI unveiled the OpenAI o1 series, a family of large language models built for complex reasoning. OpenAI describes o1 as a significant leap forward in this area, reporting that it exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems (GPQA).

A New Naming Convention

The name itself is less significant than the shift it represents. OpenAI o1 is the first model to break from the “GPT” family, marking the beginning of a new “reasoning paradigm” distinct from the “pre-training paradigm” of its predecessors. OpenAI explains: “Reasoning represents a new frontier in AI capabilities. We decided to reset the counter to 1 and designate this series as OpenAI o1.”

OpenAI simultaneously released two models (o1-preview and o1-mini), along with over a dozen demonstration videos and a 43-page research paper. A core feature is that the models deliberate at length before responding, much as a person works through a hard problem, and their performance on complex tasks improves the longer they are allowed to think.

o1’s Capabilities

The o1 series is the first OpenAI model trained with large-scale reinforcement learning to reason through a chain of thought. It generates a lengthy internal chain of thought before answering, and the longer it deliberates, the better it tends to perform on reasoning tasks.

CEO Sam Altman highlighted o1’s prowess: a version fine-tuned for programming competitions ranked in the 49th percentile of human competitors at the 2024 International Olympiad in Informatics (IOI), under competition conditions with 50 submissions per problem.

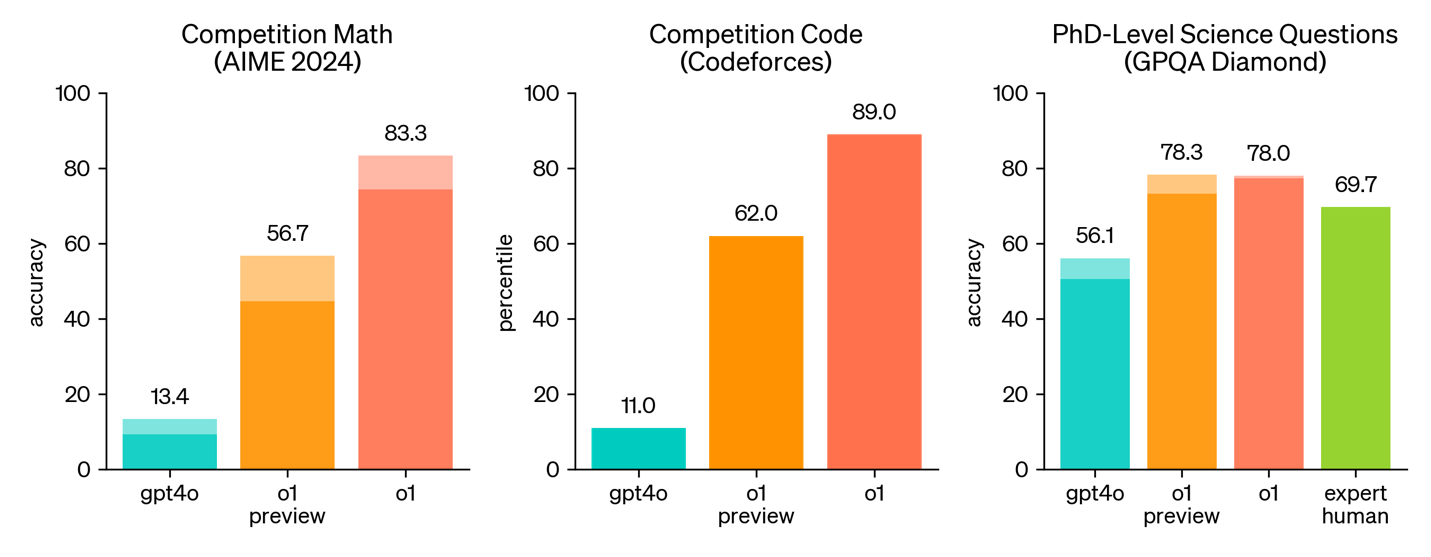

o1 also ranked in the 89th percentile on Codeforces competitive programming questions and placed among the top 500 US students on the American Invitational Mathematics Examination (AIME), a qualifier for the USA Mathematical Olympiad. Compared to GPT-4o, o1 shows substantial improvements across diverse subjects, including STEM fields, law, and economics.

What o1 Can Do

o1 retains the versatility of previous GPT models (question answering, summarization, content generation), but its advanced reasoning makes it particularly suitable for:

- Superior Reasoning: Especially strong in STEM fields.

- Creative Problem Solving: Excellent for brainstorming and ideation.

- Scientific Research: Adept at tasks like annotating complex datasets (e.g., cell sequencing) and handling intricate mathematical formulas (e.g., in quantum optics).

- Coding Proficiency: Effective code generation, debugging, and workflow assistance, with strong results on benchmarks such as HumanEval and Codeforces.

- Advanced Mathematics: Significantly outperforms previous models on mathematical benchmarks, scoring 83% on an International Mathematics Olympiad (IMO) qualifying exam (compared to GPT-4o’s 13%) and posting strong AIME results. It can also generate complex physics formulas.

- Enhanced Accuracy: Incorporates self-fact-checking for improved accuracy.

The Power of Chain of Thought

OpenAI’s o1 model solves problems through a chain of thought, much as a person deliberates over a hard question. Through reinforcement learning, o1 learns to refine its problem-solving strategies: it recognizes and corrects its own mistakes, breaks complex steps into simpler ones, and switches approaches when the current one isn’t working. This stepwise, self-correcting process within a single problem is what drives its substantially improved reasoning.

OpenAI reports that o1’s performance improves consistently both with more reinforcement learning during training (train-time compute) and with more time spent thinking when answering (test-time compute), pointing to a new kind of scaling law. However, the constraints on scaling this approach differ substantially from those of traditional pre-training, and OpenAI says it is still investigating them.
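OpenAI has not published exactly how o1 spends its extra thinking time, so the following is only a toy illustration of why more test-time compute can help, using a different and much simpler technique: majority voting over independent samples (often called self-consistency). It assumes each sample is independently correct with probability p and computes the chance that the majority answer is correct; the function name and the value p = 0.6 are illustrative assumptions, not figures from OpenAI.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct.

    Toy assumption: every sample is independently correct with probability p,
    and ties (possible when n is even) count as wrong. This illustrates
    test-time scaling in general; it is NOT a description of o1's internals.
    """
    need = n // 2 + 1  # fewest correct samples that still form a strict majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


# Spend more "compute" (more samples) on the same question and watch accuracy change.
for n in (1, 5, 25, 101):
    print(n, round(majority_vote_accuracy(0.6, n), 3))
```

With p = 0.6 the accuracy rises as n grows; with p below 0.5 it falls instead, a reminder that extra deliberation only pays off when each individual attempt is better than chance.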

To visualize o1’s thinking, consider a reading comprehension question. While GPT-4 provides a direct answer, o1-preview’s “Show Chain of Thought” option reveals its step-by-step analysis. It systematically examines each option, repeatedly questioning its reasoning (“Is this a good explanation?”, “Is the writer saying that?”, “Does the context support this?”), ultimately arriving at the correct answer through iterative refinement.

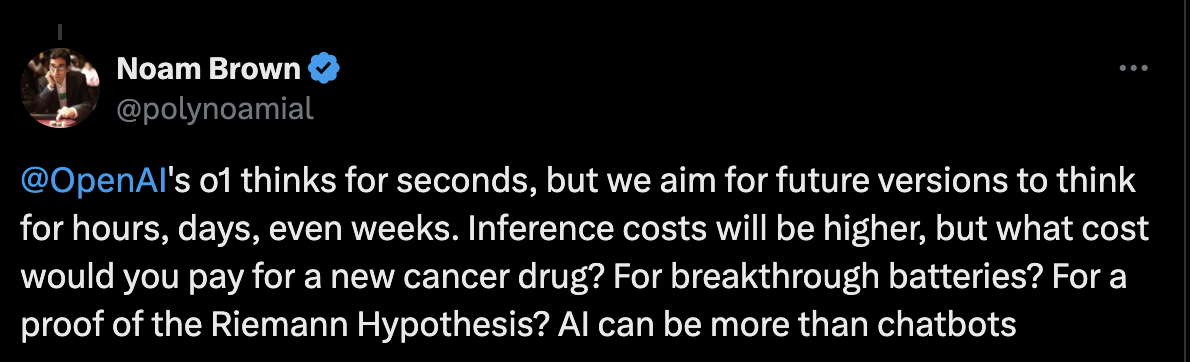

The o1 series has broad applications: it can help healthcare researchers annotate cell sequencing data, physicists generate complex quantum optics formulas, and developers build and execute multi-step workflows. Current reasoning times range from a few seconds to tens of seconds, but OpenAI intends to increase, not decrease, this deliberation time, aiming for future versions that can reason for hours, days, or even weeks. This will raise costs, but the potential for groundbreaking advances in fields such as medicine may well justify the investment. AI’s capabilities extend far beyond simple chatbots.

Caution: Avoid Querying o1’s Internal Reasoning in ChatGPT

Following the launch of o1, numerous users reported receiving warnings, and even temporary bans, for attempting to access its internal reasoning process. This sparked considerable backlash.

Users triggered warnings simply by including keywords like “reasoning trace” or “show your chain of thought” in their prompts. Even indirect attempts to elicit the model’s reasoning through alternative phrasing were detected and penalized. These users were trying to extract o1’s complete internal thought process, meaning its raw reasoning tokens, which the ChatGPT interface shows only in summarized form (accessible via the expansion button).

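OpenAI has said it decided not to show the raw chain of thought after weighing several factors, including user experience, competitive advantage, and the option of monitoring the model’s reasoning. The competitive angle is easy to see: o1’s reasoning traces would be highly valuable as training data for future models, so protecting this intellectual property from competitors is a key motivation.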

Key Considerations When Using the o1 Model

High Cost: o1 is expensive to use. At launch, o1-preview cost $15 per million input tokens and $60 per million output tokens.

Hidden Costs: The reasoning tokens o1 generates are never shown to you, but they are billed as output tokens, so even a short visible answer can carry a large bill.
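To see how much of a bill the hidden reasoning accounts for, the sketch below estimates the cost of a single call from a usage record. It assumes the record is shaped like the `usage` object returned by OpenAI’s Chat Completions API, where reasoning tokens are reported under `completion_tokens_details.reasoning_tokens` and are already included in `completion_tokens`; it also assumes o1-preview’s launch prices. The helper `estimate_cost` and the token counts in the example are hypothetical.

```python
# Hypothetical helper: estimates the USD cost of one o1-preview call from a
# usage record shaped like the Chat Completions API "usage" object.
INPUT_PRICE_PER_M = 15.00   # USD per 1M input tokens (assumed o1-preview launch price)
OUTPUT_PRICE_PER_M = 60.00  # USD per 1M output tokens; reasoning tokens billed at this rate


def estimate_cost(usage: dict) -> dict:
    prompt = usage["prompt_tokens"]
    completion = usage["completion_tokens"]  # visible answer + hidden reasoning
    details = usage.get("completion_tokens_details") or {}
    reasoning = details.get("reasoning_tokens") or 0
    total = (prompt * INPUT_PRICE_PER_M + completion * OUTPUT_PRICE_PER_M) / 1_000_000
    hidden = reasoning * OUTPUT_PRICE_PER_M / 1_000_000  # share of total spent on reasoning
    return {
        "visible_output_tokens": completion - reasoning,
        "total_usd": total,
        "hidden_reasoning_usd": hidden,
    }


# Made-up example: a short visible answer backed by a long hidden chain of thought.
usage = {
    "prompt_tokens": 1_000,
    "completion_tokens": 5_000,
    "completion_tokens_details": {"reasoning_tokens": 4_200},
}
print(estimate_cost(usage))
```

In this made-up example only 800 of the 5,000 billed output tokens are visible, and the hidden reasoning accounts for most of the cost.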

Cost-Effective Approach: For most tasks, GPT-4 is a more economical starting point. Only use o1 when GPT-4’s capabilities are insufficient.
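The advice above amounts to a simple escalation (or cascade) strategy: ask the cheaper model first and call o1 only when the answer falls short. The sketch below is a minimal, hypothetical version of that logic; the three callables stand in for real API calls to GPT-4 and o1 and for whatever task-specific check decides that an answer is good enough, which this article does not specify.

```python
from typing import Callable, Tuple


def answer_with_escalation(
    prompt: str,
    cheap_model: Callable[[str], str],
    reasoning_model: Callable[[str], str],
    is_good_enough: Callable[[str], bool],
) -> Tuple[str, str]:
    """Try the cheaper model first; escalate to the reasoning model only if needed.

    All three callables are hypothetical stand-ins: in practice they would wrap
    API calls (e.g. to GPT-4 and o1-preview) and a task-specific quality check.
    Returns the answer together with a label saying which tier produced it.
    """
    draft = cheap_model(prompt)
    if is_good_enough(draft):
        return draft, "cheap"
    return reasoning_model(prompt), "reasoning"


# Stub models for illustration; the "check" simply accepts answers containing a digit.
def cheap(prompt: str) -> str:
    return "I'm not sure."


def strong(prompt: str) -> str:
    return "The answer is 42."


def has_digit(answer: str) -> bool:
    return any(ch.isdigit() for ch in answer)


print(answer_with_escalation("What is 6 * 7?", cheap, strong, has_digit))
```

The quality check is the hard part in practice: if it is too lenient, weak answers slip through, and if it is too strict, most requests escalate to o1 and the savings disappear.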

Future Directions

OpenAI’s unveiling of the o1 series marks a pivotal moment in AI development. The model’s reasoning capabilities, which surpass human experts on certain benchmarks, represent a significant advancement over previous GPT models.

However, the o1 model is not without limitations. Its high price, compounded by the hidden cost of unseen reasoning tokens, calls for a strategic approach to its use. Furthermore, OpenAI’s restrictions on accessing the model’s complete reasoning process highlight the ongoing challenge of balancing innovation with the protection of intellectual property. o1’s future potential is immense, with OpenAI aiming for reasoning times of hours or even weeks, but the technology is still at an early stage and will need careful evaluation and further exploration by the developer community before that potential is fully realized. The journey toward truly advanced AI reasoning has begun, and o1 is a significant step along the path.

Interested in exploring the potential of OpenAI’s o1 series? Learn more about its capabilities and consider how this groundbreaking technology could transform your work in research and development. Contact Cloudsway for more information.