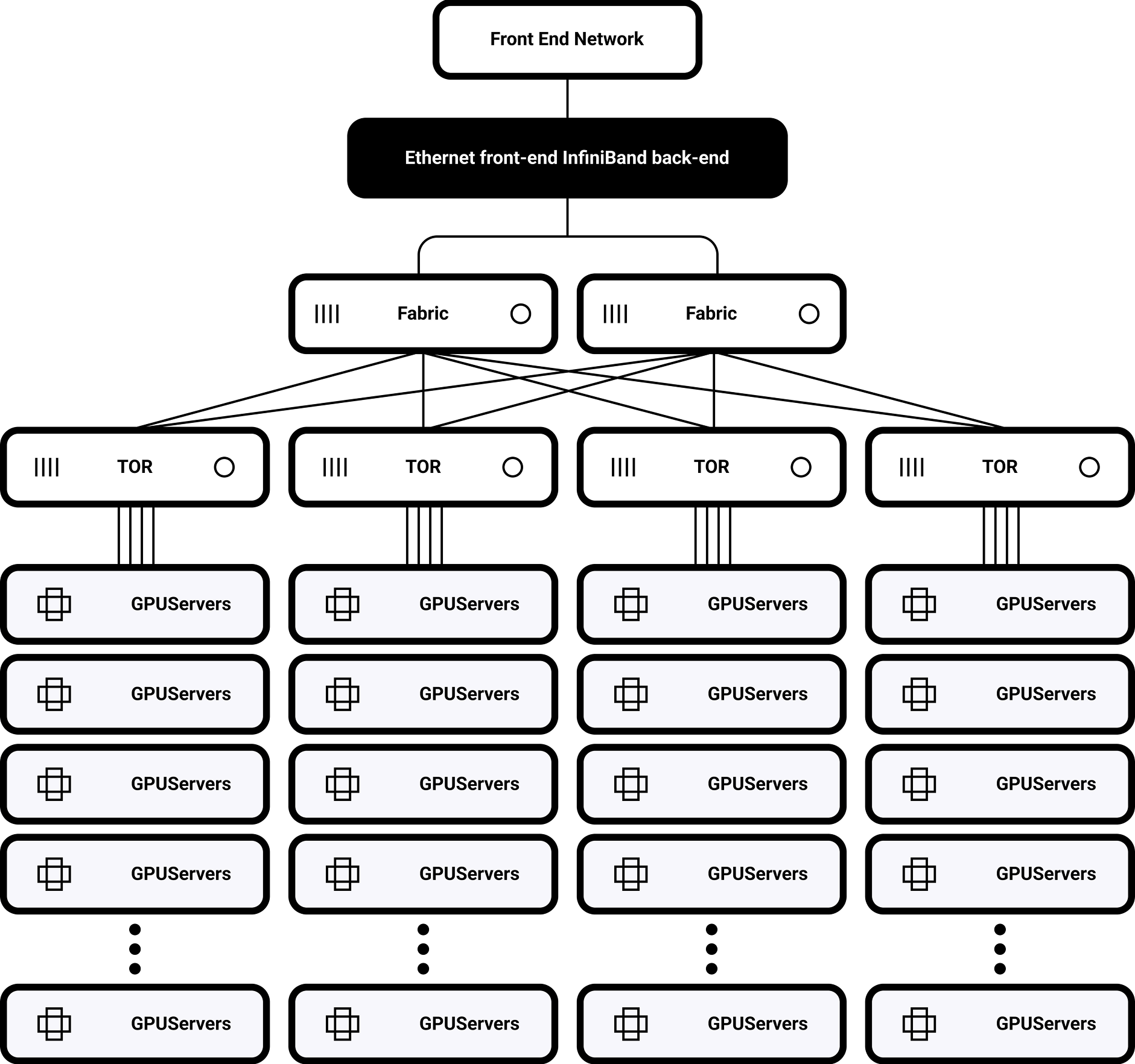

Networking Service

Say goodbye to communication bottlenecks

Building a sub-microsecond AI compute network

Technology advantages

Ultra-low-latency architecture

End-to-end latency as low as 0.8 µs (20 times faster than traditional TCP/IP)

Support for InfiniBand at 200/400/800 Gbps
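Latency and link speed matter in different regimes. A common back-of-the-envelope tool is the alpha-beta model, where one message of n bytes costs a fixed latency alpha plus n divided by the bandwidth. The sketch below plugs in the 0.8 µs figure as alpha and the three InfiniBand rates above. It is an idealized model (no congestion, headers, or software overhead), and the function name `transfer_time_s` is illustrative only.

```python
# Alpha-beta (latency-bandwidth) model of a single point-to-point message:
#   t(n) = alpha + n / beta
# alpha: fixed per-message latency in seconds; beta: link bandwidth in bytes/s.
# Idealized: ignores congestion, protocol headers, and software overheads.


def transfer_time_s(n_bytes: float, latency_s: float, link_gbps: float) -> float:
    """Estimated time to move n_bytes over one link at link_gbps (gigabits/s)."""
    bytes_per_s = link_gbps * 1e9 / 8  # gigabits/s -> bytes/s
    return latency_s + n_bytes / bytes_per_s


if __name__ == "__main__":
    alpha = 0.8e-6  # the 0.8 µs end-to-end latency quoted above, used as alpha
    for gbps in (200, 400, 800):
        for size in (4 * 1024, 1024 * 1024, 256 * 1024 * 1024):
            t = transfer_time_s(size, alpha, gbps)
            latency_share = alpha / t  # fraction of the total spent on fixed latency
            print(f"{gbps} Gb/s, {size:>9} B: {t * 1e6:10.2f} µs "
                  f"(latency share {latency_share:.0%})")
```

At 400 Gbps a 4 KiB message needs only about 0.08 µs on the wire, so the 0.8 µs latency dominates; for a 256 MiB buffer the latency share becomes negligible and link speed decides the transfer time.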

Topology-aware scheduling

Dynamic routing optimization and automatic traffic balancing across racks. In-depth tuning of the NCCL library speeds up AllReduce operations by 40%.
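AllReduce sums a buffer across all GPUs and leaves the complete result on every GPU. NCCL implements several algorithms for it, ring and tree among them. As an illustration of the collective itself (not of the tuning behind the 40% figure, which is not described here), the following pure-Python simulation walks through the ring variant's reduce-scatter and all-gather phases. The function `ring_allreduce` is a hypothetical helper, and it assumes the buffer length is divisible by the number of ranks.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a sum-AllReduce over p ranks arranged in a ring.

    Each rank's buffer is split into p equal chunks.
    Phase 1 (reduce-scatter, p - 1 steps): afterwards rank r holds the
    complete sum of chunk (r + 1) % p.
    Phase 2 (all-gather, p - 1 steps): the reduced chunks circulate until
    every rank holds the full result.
    """
    p = len(buffers)
    n = len(buffers[0])
    if n % p != 0:
        raise ValueError("this sketch assumes the buffer length is divisible by p")
    c = n // p
    data = [list(b) for b in buffers]  # per-rank copies; inputs are not modified

    def chunk(k: int) -> slice:
        return slice(k * c, (k + 1) * c)

    # Reduce-scatter: at step s, rank r sends chunk (r - s) % p to rank r + 1,
    # which adds it element-wise into its own copy of that chunk.
    for s in range(p - 1):
        # Snapshot all sends first so every rank transmits "simultaneously".
        sends = [((r + 1) % p, (r - s) % p, data[r][chunk((r - s) % p)]) for r in range(p)]
        for dst, k, payload in sends:
            data[dst][chunk(k)] = [a + b for a, b in zip(data[dst][chunk(k)], payload)]

    # All-gather: at step s, rank r forwards its reduced chunk (r + 1 - s) % p.
    for s in range(p - 1):
        sends = [((r + 1) % p, (r + 1 - s) % p, data[r][chunk((r + 1 - s) % p)]) for r in range(p)]
        for dst, k, payload in sends:
            data[dst][chunk(k)] = payload

    return data


if __name__ == "__main__":
    ranks = [[1, 2, 3, 4], [10, 20, 30, 40]]  # two ranks, four elements each
    print(ring_allreduce(ranks))  # [[11, 22, 33, 44], [11, 22, 33, 44]]
```

Each rank sends 2(p-1) chunks of n/p elements, i.e. 2(p-1)/p × n elements in total, which is why ring AllReduce is bandwidth-efficient but needs a number of sequential steps that grows with p.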

Service offerings

Single-rack topology

32 H100 GPUs fully interconnected via NVSwitch

900 GB/s bisection bandwidth
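To relate a bandwidth figure to collective performance, one can use the ring AllReduce traffic volume: each rank sends 2(n-1)/n × S bytes for a buffer of S bytes, which is also the factor the nccl-tests suite uses to convert algorithm bandwidth into "bus bandwidth". The sketch below treats 900 GB/s as the per-GPU bandwidth available to the ring; that is an assumption, since a bisection figure is not the same quantity. The 1 GB buffer is hypothetical.

```python
def ring_allreduce_time_s(size_bytes: float, n_ranks: int, per_rank_gbytes_s: float) -> float:
    """Bandwidth-only lower bound for a ring AllReduce; the latency term is ignored.

    In a ring AllReduce each rank sends (and receives) 2 * (n - 1) / n * size bytes.
    """
    bytes_per_rank = 2 * (n_ranks - 1) / n_ranks * size_bytes
    return bytes_per_rank / (per_rank_gbytes_s * 1e9)


def bus_bandwidth_gbytes_s(size_bytes: float, n_ranks: int, elapsed_s: float) -> float:
    """nccl-tests convention for AllReduce: busbw = algbw * 2 * (n - 1) / n."""
    algbw = size_bytes / elapsed_s / 1e9  # GB/s as seen by the application
    return algbw * 2 * (n_ranks - 1) / n_ranks


if __name__ == "__main__":
    size = 1e9  # hypothetical 1 GB gradient buffer
    t = ring_allreduce_time_s(size, n_ranks=32, per_rank_gbytes_s=900)
    print(f"lower bound: {t * 1e3:.2f} ms")
    print(f"implied bus bandwidth: {bus_bandwidth_gbytes_s(size, 32, t):.0f} GB/s")
```

With 32 GPUs the factor 2(n-1)/n is 1.9375, so under these assumptions a 1 GB AllReduce cannot finish faster than about 2.15 ms.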

Multi-cluster scale-out support

SHARP-based distributed routing

enabling expansion to 1,000 to 10,000 nodes
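At 1,000 to 10,000 nodes, the number of sequential steps in a collective starts to matter as much as bandwidth. A rough way to see this is to count steps: a ring AllReduce needs 2(p-1), while a binomial-tree reduce followed by a broadcast needs 2⌈log₂ p⌉. Tree-shaped schemes, including in-switch aggregation such as SHARP, target this logarithmic regime; this sketch does not model SHARP itself. It assumes one rank per node and reuses the 0.8 µs figure as a per-step latency, both illustrative choices.

```python
import math


def ring_latency_steps(p: int) -> int:
    """Sequential steps in a ring AllReduce: (p - 1) reduce-scatter + (p - 1) all-gather."""
    if p < 1:
        raise ValueError("need at least one rank")
    return 2 * (p - 1)


def binomial_tree_latency_steps(p: int) -> int:
    """Binomial-tree reduce followed by a binomial-tree broadcast: 2 * ceil(log2 p) steps."""
    if p < 1:
        raise ValueError("need at least one rank")
    return 2 * math.ceil(math.log2(p))


if __name__ == "__main__":
    alpha_us = 0.8  # assumed per-step latency in µs, borrowed from the figure above
    for p in (1_000, 10_000):
        ring, tree = ring_latency_steps(p), binomial_tree_latency_steps(p)
        print(f"p={p:>6}: ring {ring} steps (~{ring * alpha_us:.0f} µs), "
              f"tree {tree} steps (~{tree * alpha_us:.1f} µs)")
```

For 10,000 nodes the ring needs 19,998 sequential steps versus 28 for the tree, which is why large-scale fabrics favor hierarchical or in-network reduction for latency-bound collectives.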

Solution features for accelerated large-model training

GPUDirect RDMA and NCCL topology optimization

Results: training job for a 175B-parameter model

Communication overhead was reduced from 35% to 8%, and inter-rack traffic was reduced by 60%.
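The overhead figures can be translated into a step-time estimate, but only under assumptions the result does not state: compute time per step stays the same, and communication is not overlapped with compute. With overhead f = comm / (compute + comm), a step takes compute / (1 - f), so the ratio of new to old step time is (1 - f_before) / (1 - f_after). The helper `step_time_ratio` is a hypothetical name for this arithmetic.

```python
def step_time_ratio(overhead_before: float, overhead_after: float) -> float:
    """New/old training step time implied by a change in communication overhead.

    Assumptions (not stated in the source): compute time per step is unchanged,
    and communication is not overlapped with compute, so
        overhead f = comm / (compute + comm)  =>  step time = compute / (1 - f)
    and the ratio of step times is (1 - f_before) / (1 - f_after).
    """
    for f in (overhead_before, overhead_after):
        if not 0 <= f < 1:
            raise ValueError("overhead must be a fraction in [0, 1)")
    return (1 - overhead_before) / (1 - overhead_after)


if __name__ == "__main__":
    ratio = step_time_ratio(0.35, 0.08)
    print(f"step time: {ratio:.3f}x of before, throughput: {1 / ratio:.2f}x")
```

Under these assumptions, going from 35% to 8% overhead means each step takes about 0.71 of its previous time, roughly a 1.4× throughput gain.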

Balanced convergence of storage and compute: the three pillars of optimal performance

| Layer | Bandwidth | Latency | Scalability unit |
|---|---|---|---|
| Network layer | 400 Gbps | 0.6 µs | 36-port lossless fabric |
| Compute layer | 1.8 TB/s NVLink | 0.3 µs GPU interconnect | 8-GPU node |
| Storage layer | 320 GB/s all-flash | 200 µs random read | 100 PB unified namespace |
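The table mixes gigabits (Gbps) and gigabytes (GB/s, TB/s), which makes the layers easy to misread side by side. A small normalization helper, assuming decimal units (1 TB = 1000 GB) and 8 bits per byte, puts them on one scale. Whether each figure is per link or aggregate is not specified in the table, so the comparison is only indicative; the helper names and the 1 TB checkpoint are hypothetical.

```python
# Normalize the table's bandwidth figures to GB/s (decimal units: 1 TB = 1000 GB,
# 1 byte = 8 bits). The unit strings are this sketch's own labels.
UNIT_TO_GBYTES_S = {"Gbps": 1 / 8, "GB/s": 1.0, "TB/s": 1000.0}


def to_gbytes_s(value: float, unit: str) -> float:
    """Convert a bandwidth figure to gigabytes per second."""
    return value * UNIT_TO_GBYTES_S[unit]


def read_time_s(size_gbytes: float, bandwidth_gbytes_s: float) -> float:
    """Idealized time to stream size_gbytes at a sustained bandwidth."""
    return size_gbytes / bandwidth_gbytes_s


if __name__ == "__main__":
    table = {
        "Network layer": (400, "Gbps"),
        "Compute layer (NVLink)": (1.8, "TB/s"),
        "Storage layer (all-flash)": (320, "GB/s"),
    }
    for layer, (value, unit) in table.items():
        print(f"{layer:<26} {to_gbytes_s(value, unit):8.1f} GB/s")
    # Hypothetical 1 TB checkpoint read at the storage layer's 320 GB/s.
    print(f"1 TB checkpoint: {read_time_s(1000, to_gbytes_s(320, 'GB/s')):.3f} s")
```

Expressed in GB/s, the network layer's 400 Gbps is 50 GB/s, NVLink's 1.8 TB/s is 1,800 GB/s, and the storage layer sits in between at 320 GB/s.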