Networking Service

Say goodbye to communication bottlenecks

Building a sub-microsecond AI computing network

Technology advantages

Ultra-low-latency architecture

End-to-end latency as low as 0.8 µs (20× lower than traditional TCP/IP)

Compatible with 200/400/800 Gbps InfiniBand standards
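
Taken at face value, the 20× comparison implies a TCP/IP baseline of roughly 16 µs. This is only arithmetic on the two stated figures, not an independent benchmark:

```latex
t_{\text{TCP/IP}} \approx 20 \times t_{\text{InfiniBand}} = 20 \times 0.8\,\mu\text{s} = 16\,\mu\text{s}
```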

Topology-aware scheduling

Dynamic routing optimization and automatic traffic balancing across racks

In-depth tuning of the NCCL library delivers a 40% speedup for AllReduce operations
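
AllReduce leaves every rank holding the element-wise sum of all ranks' buffers. The sketch below is a pure-Python simulation of the textbook ring algorithm, which runs a reduce-scatter phase followed by an all-gather phase. It illustrates the collective itself. It is not NCCL's implementation and does not reproduce the optimizations claimed above. The function name `ring_allreduce` is hypothetical.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a sum AllReduce over n ranks arranged in a ring.

    Each rank's buffer is split into n equal chunks. During n - 1 reduce-scatter
    steps, every rank sends one chunk to its right neighbour, which adds it into
    its own copy. Afterwards rank r holds the fully reduced chunk (r + 1) % n.
    During n - 1 all-gather steps, those reduced chunks travel around the ring.
    """
    n = len(buffers)
    length = len(buffers[0])
    assert all(len(b) == length for b in buffers), "buffers must have equal length"
    assert length % n == 0, "sketch assumes buffer length divisible by rank count"
    size = length // n
    data = [list(b) for b in buffers]  # work on copies so inputs stay untouched

    def chunk(rank: int, c: int) -> List[float]:
        # Slicing returns a copy, so a sent payload is a snapshot.
        return data[rank][c * size:(c + 1) * size]

    # Reduce-scatter: at step s, rank r sends chunk (r - s) % n to rank r + 1.
    for s in range(n - 1):
        # Collect all outgoing chunks first so sends within a step are "simultaneous".
        outgoing = [(r, (r - s) % n, chunk(r, (r - s) % n)) for r in range(n)]
        for r, c, payload in outgoing:
            dst = (r + 1) % n
            for i, v in enumerate(payload):
                data[dst][c * size + i] += v

    # All-gather: at step s, rank r forwards reduced chunk (r + 1 - s) % n to rank r + 1.
    for s in range(n - 1):
        outgoing = [(r, (r + 1 - s) % n, chunk(r, (r + 1 - s) % n)) for r in range(n)]
        for r, c, payload in outgoing:
            dst = (r + 1) % n
            data[dst][c * size:(c + 1) * size] = payload

    return data
```

In this algorithm each rank transmits 2(n - 1)/n of the buffer in total, independent of the ring size. This is why ring AllReduce is considered bandwidth-efficient. That is a property of the textbook algorithm, not a measurement of this service.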

Service capabilities

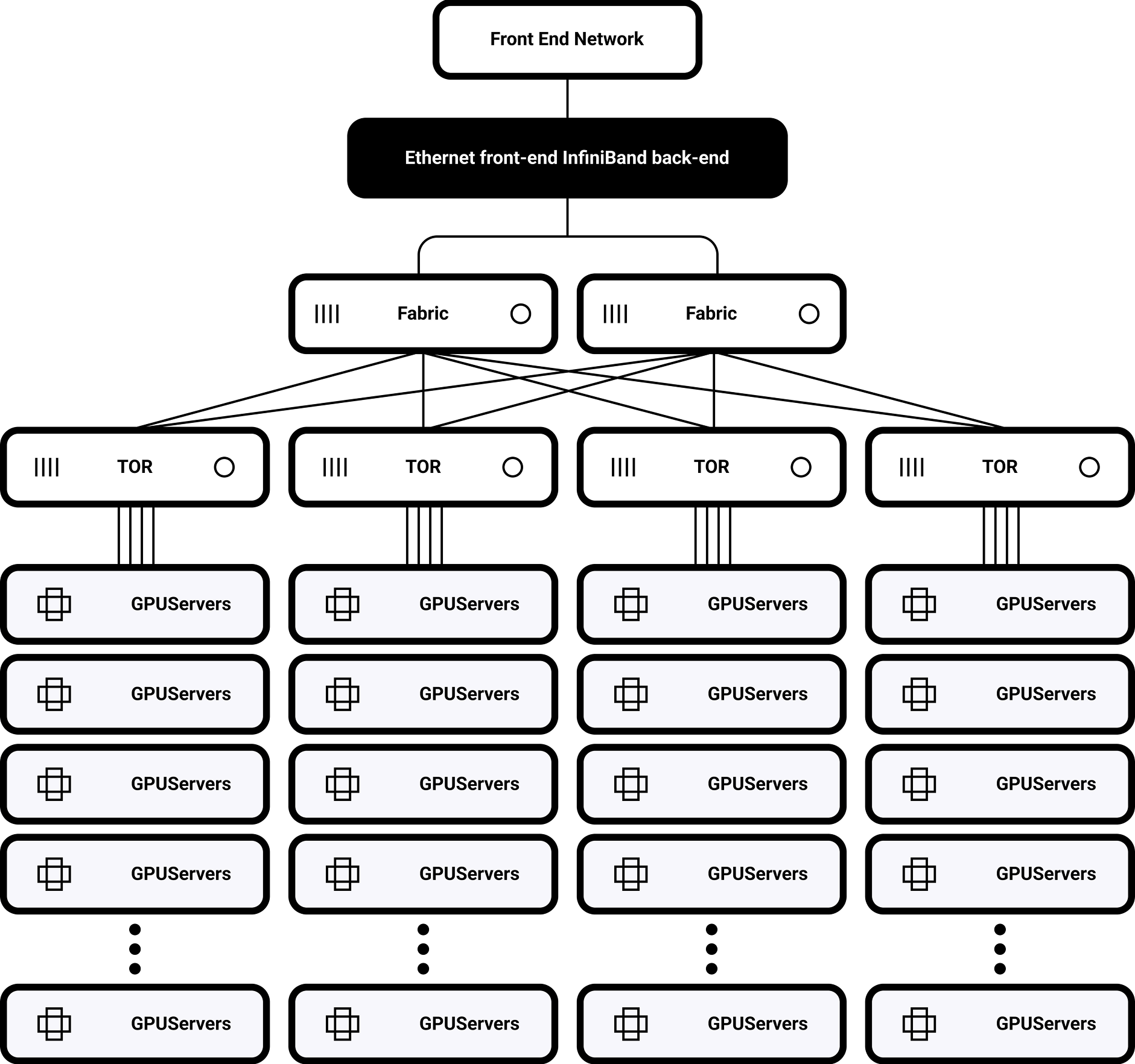

Single-rack topology

32 H100 GPUs fully interconnected via NVSwitch

900 GB/s bisection bandwidth

Multi-cluster scaling support

SHARP-based distributed routing

Enables scaling to 1,000 to 10,000 nodes

Solution features for accelerated large-model training

GPUDirect RDMA and NCCL topology optimization

Results: training a 175B-parameter model

Communication overhead reduced from 35% to 8%; cross-rack traffic reduced by 60%
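
The overhead figures can be turned into a rough step-time speedup under two assumptions that the source does not state. The first is that "communication overhead" means the non-overlapped share of each training step. The second is that compute time per step stays the same. The helper `step_speedup` is hypothetical.

```python
def step_speedup(old_comm_fraction: float, new_comm_fraction: float) -> float:
    """Estimate step-time speedup when communication's share of a step shrinks.

    Assumes per-step compute time C is fixed and communication is not overlapped
    with compute, so step time T = C / (1 - f), where f is the communication fraction.
    """
    if not (0.0 <= old_comm_fraction < 1.0 and 0.0 <= new_comm_fraction < 1.0):
        raise ValueError("fractions must be in [0, 1)")
    old_step = 1.0 / (1.0 - old_comm_fraction)  # step time in units of C
    new_step = 1.0 / (1.0 - new_comm_fraction)
    return old_step / new_step


print(f"{step_speedup(0.35, 0.08):.2f}x")  # prints "1.42x"
```

Under these assumptions, going from 35% to 8% corresponds to a step that runs about 1.4× faster (0.92 / 0.65). If communication overlaps with compute, the real gain would differ.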

Balanced storage and compute: the three pillars of optimal performance

| Layer | Bandwidth | Latency | Scalability unit |
| --- | --- | --- | --- |
| Network layer | 400 Gbps | 0.6 µs | 36-port lossless fabric |
| Compute layer | 1.8 TB/s NVLink | 0.3 µs GPU interconnect | 8-GPU node |
| Storage layer | 320 GB/s all-flash | 200 µs random read | 100 PB unified namespace |
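
The latency-bandwidth (alpha-beta) model, t = α + n / β, is a common first-order way to compare these layers. The sketch below plugs in the table's headline figures and converts 400 Gbps to 50 GB/s. It ignores protocol overhead, congestion and queueing. The `LAYERS` mapping and the `transfer_time_us` helper are illustrative names, not part of the service.

```python
# Headline figures from the table: (latency in microseconds, bandwidth in bytes per second).
LAYERS = {
    "network": (0.6, 400e9 / 8),   # 400 Gbps link = 50 GB/s
    "compute": (0.3, 1.8e12),      # NVLink at 1.8 TB/s
    "storage": (200.0, 320e9),     # all-flash at 320 GB/s
}


def transfer_time_us(layer: str, num_bytes: float) -> float:
    """First-order alpha-beta estimate: latency plus size / bandwidth, in microseconds."""
    latency_us, bytes_per_s = LAYERS[layer]
    return latency_us + num_bytes / bytes_per_s * 1e6


for size in (4 * 1024, 1024 ** 3):  # a 4 KiB message and a 1 GiB tensor
    for layer in LAYERS:
        print(f"{layer:8s} {size:>11d} B: {transfer_time_us(layer, size):12.1f} us")
```

For small messages the latency term dominates, and the storage layer's 200 µs read latency dwarfs its bandwidth term. For gigabyte-scale tensors the bandwidth term dominates instead, which is where the 1.8 TB/s NVLink figure matters most.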